Funding: German Research Foundation (DFG)

Grant number: 500490184

Project duration: 01.09.2023 - 31.12.2025

Collaborating University: Ruhr-Universität Bochum (RUB), Chair of Production Systems (LPS)

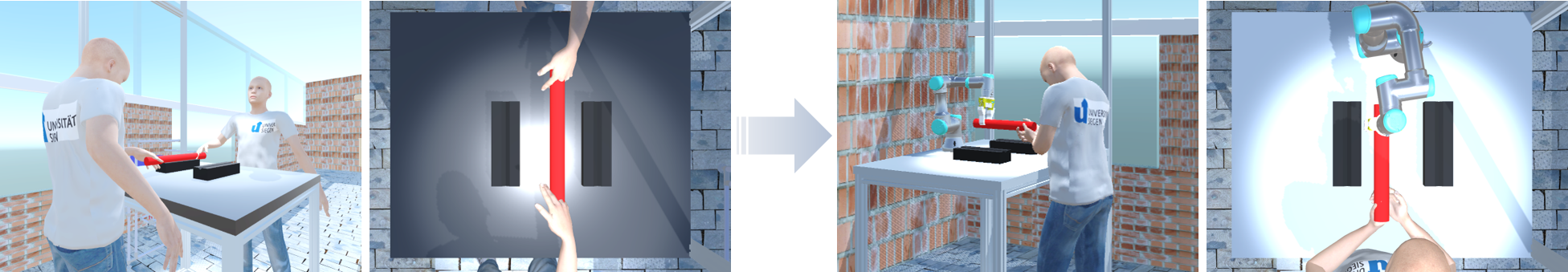

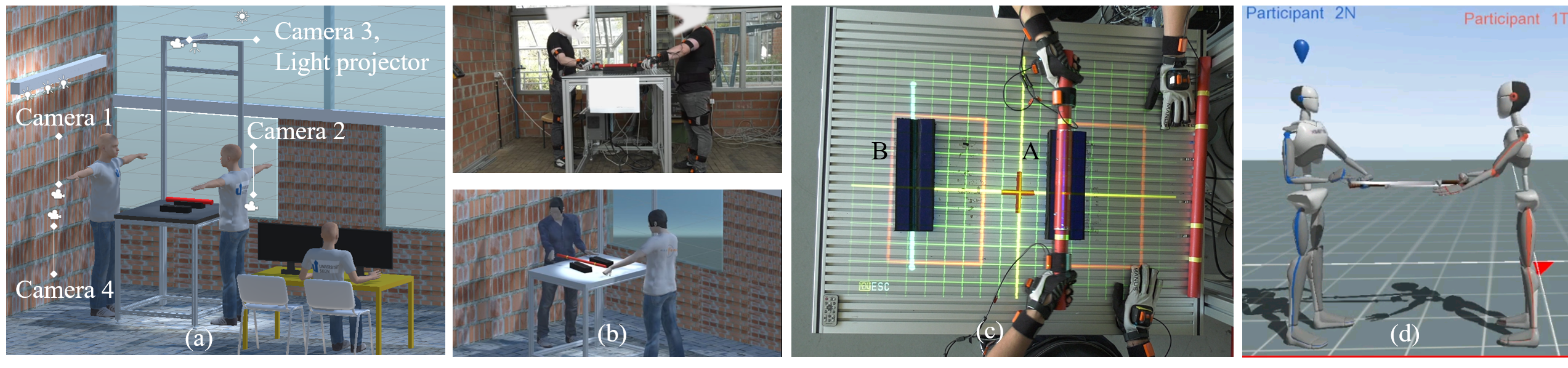

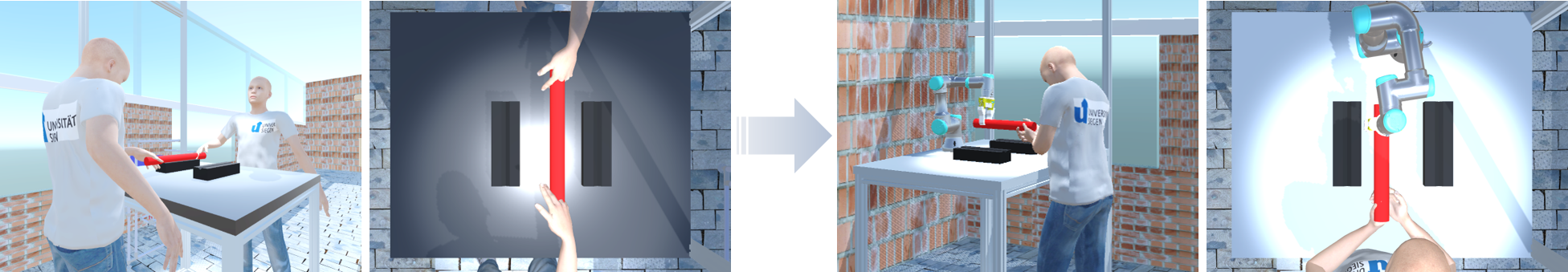

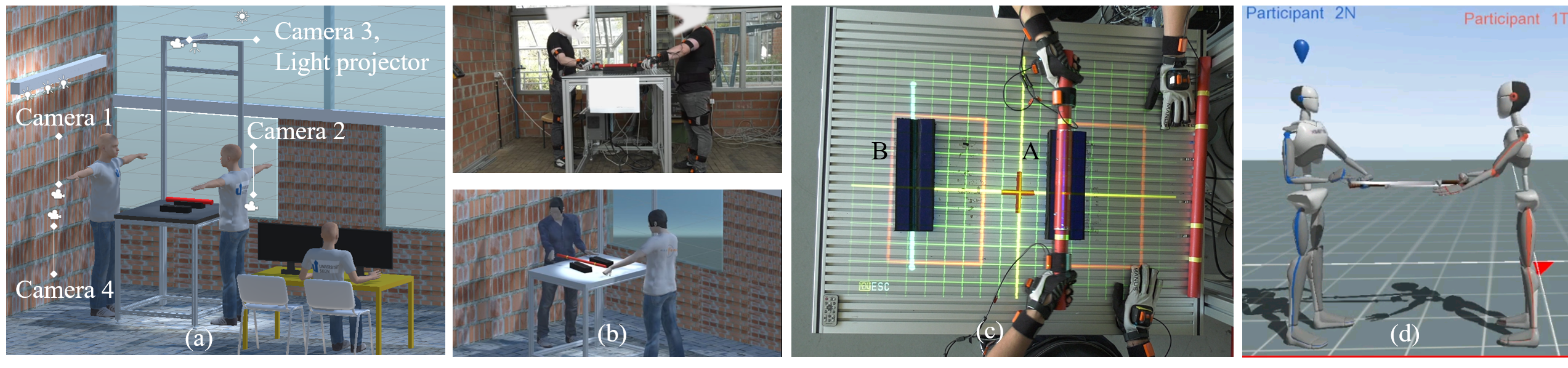

Today, the capabilities of human-robot collaboration (HRC) for manufacturing processes are widely investigated, mainly in laboratory environments. However, they are rarely applied in real production systems. Collaborative handling of an object is one of the current challenges in realizing genuine collaboration in HRC. In this context, it is still unclear how robots and humans should behave when jointly handling an object, and how each should anticipate the other's motion with mutual care. When the speed of the robot tool center point (TCP) depends on human motion, it may demand high joint speeds, which can pose a risk to human body parts. In addition, robots are typically represented by kinematic and dynamic rigid-body models that yield structured, controlled motion profiles, whereas human motion is generated with data-driven techniques.
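The joint-speed risk follows from inverse differential kinematics, dq/dt = J(q)^-1 · v_TCP: as the Jacobian J approaches a singular configuration, even a moderate TCP velocity demands very large joint speeds. The sketch below illustrates this for a hypothetical planar two-link arm. The link lengths, configurations and the 0.2 m/s TCP velocity are assumptions chosen for illustration, not parameters of the project's robot.

```python
import numpy as np

# Hypothetical link lengths of a planar two-link arm (metres); not project data.
L1, L2 = 0.5, 0.4


def jacobian(q):
    """Jacobian mapping joint speeds (rad/s) to planar TCP velocity (m/s)."""
    q1, q2 = q
    s1, c1 = np.sin(q1), np.cos(q1)
    s12, c12 = np.sin(q1 + q2), np.cos(q1 + q2)
    return np.array([
        [-L1 * s1 - L2 * s12, -L2 * s12],
        [L1 * c1 + L2 * c12, L2 * c12],
    ])


def joint_speeds(q, v_tcp):
    """Solve J(q) @ qd = v_tcp for the joint speeds qd (assumes J is invertible)."""
    return np.linalg.solve(jacobian(q), v_tcp)


# TCP velocity a human partner might impose along the x-axis (assumed 0.2 m/s).
v_tcp = np.array([0.2, 0.0])

qd_bent = joint_speeds(np.array([0.0, np.pi / 2]), v_tcp)              # elbow at 90 degrees
qd_stretched = joint_speeds(np.array([0.0, np.radians(1.0)]), v_tcp)   # nearly straight arm

print("elbow bent:    max |qd| =", np.abs(qd_bent).max(), "rad/s")
print("arm stretched: max |qd| =", np.abs(qd_stretched).max(), "rad/s")
```

With these assumed values, the bent configuration needs about 0.5 rad/s, while the nearly stretched arm needs more than 50 rad/s for the same TCP velocity. This suggests that a human-driven TCP command would have to be limited or reshaped near singular configurations.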

The project aims to investigate real-time HRC motion coupling and harmonization methods that reliably anticipate human motion behavior and ensure a high degree of mutual care.

Within HiSMoT, the investigation is guided by the following basic questions:

A human motion model for pick-and-place tasks based on latent-space features was successfully created using the Morphable Graphs libraries, and the resulting motions were analyzed in the digital twin.

A latent-space controller for position control shows promise for coupling the human and robot models.
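The idea of position control in a latent space can be illustrated with a deliberately simplified stand-in: a linear latent space obtained by principal component analysis (PCA) of pose vectors, and a proportional (P) controller that moves the latent state toward a target code and decodes each step back into a full pose. This is an assumption for illustration only. It does not use or reproduce the Morphable Graphs libraries or the project's controller, the pose data are synthetic, and all function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for recorded poses: 200 frames of a 6-D pose vector
# driven by 2 hidden factors plus small noise (not project data).
latent_true = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 6))
poses = latent_true @ mixing + 0.01 * rng.normal(size=(200, 6))


def fit_pca(data, n_components):
    """Return the mean pose and an orthonormal basis (k x d) of the latent space."""
    mean = data.mean(axis=0)
    _, _, vt = np.linalg.svd(data - mean, full_matrices=False)
    return mean, vt[:n_components]


def encode(pose, mean, basis):
    """Project a pose into latent coordinates."""
    return basis @ (pose - mean)


def decode(z, mean, basis):
    """Map latent coordinates back to a full pose vector."""
    return mean + basis.T @ z


def latent_p_control(z_start, z_target, gain=0.2, steps=50):
    """Hypothetical P-controller: each step moves z a fraction `gain` toward z_target."""
    z = z_start.copy()
    trajectory = [z.copy()]
    for _ in range(steps):
        z = z + gain * (z_target - z)
        trajectory.append(z.copy())
    return np.array(trajectory)


mean, basis = fit_pca(poses, n_components=2)
z_start = encode(poses[0], mean, basis)
z_target = encode(poses[-1], mean, basis)
trajectory = latent_p_control(z_start, z_target)

# Decode every latent state into a pose; a robot TCP target could be derived
# from such decoded poses (e.g. a hand position), which is not modelled here.
decoded = np.array([decode(z, mean, basis) for z in trajectory])
errors = np.linalg.norm(trajectory - z_target, axis=1)
print("latent error: start", errors[0], "end", errors[-1])
```

In this sketch the latent error shrinks by the factor (1 - gain) per step, and every intermediate state stays inside the subspace spanned by the recorded motions. A real coupling scheme would additionally have to respect the robot's joint-speed limits.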

Univ.-Prof. Dr.-Ing. Martin Manns

Head of Chair, FAMS - Manufacturing Automation and Assembly

E-mail: martin.manns@uni-siegen.de

Dr.-Ing. Tadele B. Tuli

Chief Engineer (Collaborative Robotics), FAMS

E-mail: tadele-belay.tuli@uni-siegen.de

Dr. Raza Saeed

HiSMoT Project leader, FAMS

E-mail: raza.saeed@uni-siegen.de